The Silent Assassin: The Short Shot

Machines Do Not Lie, They Only Remain Silent

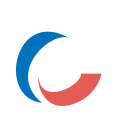

9:30 AM. Injection Molding Factory No. 3, Line B. The massive 200-ton injection molding machine spits out a rhythmic roar. Thump— Hiss, Thump— Hiss. This sound is the heartbeat of the factory. But today, a subtle arrhythmia was detected in that beat.

The inspection foreman tossed a plastic part onto my desk. “It’s started again. The ends aren’t filled.”

‘Short Shot’. A molding defect where the molten plastic solidifies before reaching the end of the mold. Field engineers call this defect a ‘ghost.’ The causes are simply too varied.

The foreman took off his oil-stained gloves and asked, “Is the barrel temperature too low, or is the injection pressure lacking? Should we tear apart the heater?”

In the field, decisions are usually made by ‘Gut Feeling’. “It’s cold today, so it must be a temperature issue.” And if that feeling is wrong, millions of won in mold modification costs vanish into thin air.

I shook my head. “No, not yet. I’m not sure yet. Let’s check further.”

I picked up a blank notebook and took out my weapon: ‘Bayesian Inference.’ Bayesian reasoning is the process of finding the hot truth through cold data, and what follows is precisely the record of one ‘Bayesian Update.’

I decided to become a Detective for a moment, chasing suspects to find the culprit. The culprit was inside the factory. Two Suspects immediately came to mind.

Suspects

- Suspect A: Temperature (H_T)

  - Profile: Volatile personality. If the barrel temperature ‘hunts’ (fluctuates), the resin hardens.

  - Signature: When this guy is the culprit, the defect rate skyrockets to 8% (p = 0.08).

- Suspect B: Pressure (H_P)

  - Profile: Lacks strength. Weak holding pressure fails to push the material to the end.

  - Signature: This guy is relatively quiet, with a defect rate of around 4% (p = 0.04).

Empirical Intuition and Suspicion: Prior Knowledge (Prior Probability)

The culprit is one of the two. However, I cannot hastily stop the machine and tear open the heater. Looking at the records of the last quarter from the MES (Manufacturing Execution System), 60% of short shot incidents were the doing of the ‘Temperature’ guy.

“Looking at the past criminal records, the probability is high that it is the Temperature guy this time as well.”

The Prior Belief in my head was set. I started recording the data in the notebook.

Prior Belief

- Probability of Temperature P(H_T) = 0.6 (Prime Suspect)

- Probability of Pressure P(H_P) = 0.4

Pros prefer to use ‘Odds’ rather than probabilities.

$$\text{Prior Odds} = \frac{0.6}{0.4} = \mathbf{1.5}$$

(Interpretation: Currently, I am betting 1.5 times as much money on Temperature being the culprit as on Pressure.)
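The conversion between probability and odds can be sketched in a few lines of Python. This is a minimal illustration; the function names `prob_to_odds` and `odds_to_prob` are assumptions made for this example and do not come from any library.

```python
def prob_to_odds(p: float) -> float:
    """Convert a probability in [0, 1) into odds in favour."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    return p / (1.0 - p)


def odds_to_prob(odds: float) -> float:
    """Convert non-negative odds in favour back into a probability."""
    if odds < 0.0:
        raise ValueError("odds must be non-negative")
    return odds / (1.0 + odds)


# Prior belief from the MES records: P(Temperature) = 0.6
prior_odds = prob_to_odds(0.6)
print(f"Prior odds (Temperature : Pressure) = {prior_odds:.2f}")
print(f"Back to probability = {odds_to_prob(prior_odds):.2f}")
```

Working in odds pays off later: each new piece of evidence becomes a single multiplication.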

The First Evidence: The Morning Raid (Update 1)

“Foreman, let’s randomly inspect just 50 items produced right now.”

At 10 AM, the first evidence (D1) spilled onto the desk. Out of 50 samples, 5 are short shots. (n = 50, k = 5)

“5 out of 50… a defect rate of 10%?”

For a moment, my spine went cold. If it were a Pressure problem (p = 0.04), I would expect about 2 defects out of 50 (50 × 0.04). 5 is too many, too violent to be that guy’s doing. It is closer to the Signature of the violent Temperature guy, whose 8% defect rate would produce about 4 out of 50.

Here, Bayes’ core weapon, Likelihood, appears. “Who must be the culprit for this evidence (5/50) to make more sense?”

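I quickly calculated the Bayes Factor, that is, the ‘weight of evidence.’ Treating each part as an independent pass/fail trial, both likelihoods are binomial, and the binomial coefficient they share cancels in the ratio.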

$$\text{Bayes Factor} = \frac{P(D|H_T)}{P(D|H_P)}$$

$$= \left(\frac{0.08}{0.04}\right)^5 \times \left(\frac{0.92}{0.96}\right)^{45}$$

$$= 2^5 \times (0.9583)^{45}$$

$$= 32 \times 0.147$$

$$\approx \mathbf{4.7}$$

4.7 times.

This data (5 defects out of 50) supports the Temperature hypothesis 4.7 times more strongly than Pressure.
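The Bayes Factor above can be checked with a short Python sketch. It assumes each part is an independent trial with a fixed defect rate, so the likelihood is binomial; the helper `binom_pmf` is written for this example using only the standard library.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k defects among n independent parts with defect rate p."""
    return comb(n, k) * p**k * (1.0 - p) ** (n - k)


P_TEMP, P_PRES = 0.08, 0.04  # defect-rate signatures from the suspect profiles
n, k = 50, 5                 # morning sample D1: 5 short shots out of 50

like_temp = binom_pmf(k, n, P_TEMP)   # P(D1 | H_T)
like_pres = binom_pmf(k, n, P_PRES)   # P(D1 | H_P)
bayes_factor = like_temp / like_pres  # comb(n, k) cancels in this ratio

print(f"P(D1 | H_T)  = {like_temp:.4f}")
print(f"P(D1 | H_P)  = {like_pres:.4f}")
print(f"Bayes factor = {bayes_factor:.2f}")  # about 4.71
```

Neither likelihood is large on its own. What matters is the ratio between them.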

Now I must Update my belief. I multiply [my original belief (1.5)] by [the power of the evidence (4.7)].

Bayes Update (Posterior 1): Rapid rise of confidence

The existing Odds (1.5) are combined with the evidence (Bayes Factor 4.7, or about +1.55 on the natural-log scale).

$$\text{New Odds} = 1.5 \times 4.7 = \mathbf{7.05}$$

Converting this to probability:

$$P(H_T|D_1) = \frac{7.05}{1+7.05} \approx \mathbf{87.6\%}$$
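As a cross-check, the odds shortcut should agree with the textbook form of Bayes’ theorem, P(H_T | D) = P(H_T) P(D | H_T) / [P(H_T) P(D | H_T) + P(H_P) P(D | H_P)]. The sketch below computes the posterior both ways. The variable names are illustrative, and it assumes Temperature and Pressure are the only two candidate causes, as in the story.

```python
prior_temp, prior_pres = 0.6, 0.4  # prior beliefs from last quarter's records
rate_temp, rate_pres = 0.08, 0.04  # defect-rate signatures
n, k = 50, 5                       # morning sample D1


def kernel(p: float) -> float:
    """Binomial likelihood without the coefficient, which cancels in every ratio below."""
    return p**k * (1.0 - p) ** (n - k)


# Route 1: textbook Bayes' theorem with an explicit normalising sum.
numerator = prior_temp * kernel(rate_temp)
evidence = numerator + prior_pres * kernel(rate_pres)
posterior_textbook = numerator / evidence

# Route 2: prior odds times Bayes factor, then back to a probability.
posterior_odds = (prior_temp / prior_pres) * kernel(rate_temp) / kernel(rate_pres)
posterior_from_odds = posterior_odds / (1.0 + posterior_odds)

print(f"Textbook form: {posterior_textbook:.1%}")
print(f"Odds form:     {posterior_from_odds:.1%}")
```

Both routes give the same posterior of roughly 87.6%; the odds form simply skips the normalising denominator.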

New Conviction: The probability that Temperature is the culprit skyrocketed from 60% to 87.6%. The data is screaming, “The culprit is Temperature!”

“The probability that it is a heater-side problem is nearly 90%! Foreman, have the maintenance team stand by. We are going in for a barrel temperature check!”

My voice was filled with conviction. Intoxicated by the sense of victory, I took a sip of coffee.

The Second Evidence: Bayes’ Counterattack (Update 2)

The highlight of this scenario starts now. Many people mistake Bayes for ‘calculating once and being done with it.’ However, the true power of Bayes lies in accumulation (Update Loop).

2 PM. Just before the maintenance team arrived, the foreman brought the 2nd sample (D2) with a strange expression.

“Team Leader, I sampled another 50 after lunch… and it’s strange.”

[Out of a total of 50, 1 defect occurred]

“What? 1 piece?”

I heard the sound of my conviction cracking. If the real culprit is the violent Temperature (usually 8%), 1 out of 50 (2%) is too few. Rather, this is the kind of thing the quiet Pressure (usually 4%) guy would do.

The data is shouting. “The culprit might not be Temperature!”

Now, the magic of Bayesian inference begins. The 87.6% conviction (Posterior) I had earlier does not disappear. It becomes the New Prior for the afternoon’s inference.

[Morning’s conclusion = Afternoon’s start] This is the way Artificial Intelligence, and we, learn the world. I started calculating again, using the morning’s result (the posterior probability) as the prior for this round and measuring the evidentiary power of the new data (D2) against it.

$$\text{Bayes Factor}_2 = \left(\frac{0.08}{0.04}\right)^1 \times \left(\frac{0.92}{0.96}\right)^{49}$$

$$= 2 \times (0.9583)^{49}$$

$$= 2 \times 0.124$$

$$\approx \mathbf{0.249}$$

The value is far smaller than 1. This means it is “evidence unfavorable to the Temperature hypothesis.” To be precise, the second evidence supports the Pressure hypothesis about 4 times more strongly (1 / 0.249 ≈ 4.0). It is tremendous counter-evidence. I heard the sound of the morning’s conviction crumbling.

Bayes 2nd Update (Posterior 2): Bayes’ Judgment, Humbled Conviction

Now, I multiply ‘the Morning’s Conviction (Odds 7.05)’ by ‘the Afternoon’s Reversal (0.249).’

$$\text{Final Odds} = 7.05 \times 0.249 \approx \mathbf{1.76}$$

Converting to probability:

$$P(H_T | D_1, D_2) = \frac{1.76}{1+1.76} \approx \mathbf{63.8\%}$$
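The update loop can be sketched as follows. The `bayes_factor` helper is an illustrative name, and the model assumes the two batches are independent draws from the same process. Under that assumption, updating batch by batch gives the same posterior as pooling all 100 parts into one update.

```python
def bayes_factor(k: int, n: int, p_temp: float = 0.08, p_pres: float = 0.04) -> float:
    """Likelihood ratio P(D | H_T) / P(D | H_P) for k defects in n parts."""
    return (p_temp / p_pres) ** k * ((1 - p_temp) / (1 - p_pres)) ** (n - k)


batches = [(5, 50), (1, 50)]  # (defects, sample size) for D1 and D2
prior_odds = 0.6 / 0.4

# Sequential updating: each posterior becomes the next prior.
odds = prior_odds
for i, (k, n) in enumerate(batches, start=1):
    bf = bayes_factor(k, n)
    odds *= bf
    print(f"After D{i}: BF = {bf:.3f}, odds = {odds:.2f}, P(H_T) = {odds / (1 + odds):.1%}")
sequential = odds / (1 + odds)

# Pooled updating: treat everything as one sample of 6 defects out of 100.
total_k = sum(k for k, _ in batches)
total_n = sum(n for _, n in batches)
pooled_odds = prior_odds * bayes_factor(total_k, total_n)
pooled = pooled_odds / (1 + pooled_odds)

print(f"Pooled 6/100: P(H_T) = {pooled:.1%}")
```

Carrying unrounded Bayes factors through the loop, the final posterior lands close to 64%, whichever route is taken.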

The truth converges.

The probability graph in my head fluctuated. I urgently stopped the call to the maintenance team.

“Wait, stand by. Do not tear open the heater yet.”

I wiped my sweat and leaned back in the chair. In the morning I was almost 88% sure, but now that has dropped sharply to about 64%. Temperature is still suspicious, but the possibility of it being ‘Pressure’ has revived to 36%. If I had excitedly torn open the heater earlier, I might have replaced a perfectly fine heater and missed the pressure problem that could be the real cause. The factory nearly wasted precious time and money.

“Foreman, let’s pull just 50 more samples from the next Lot. If there is just one more piece of data… we can catch it for sure.”
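How much a third batch could shift the picture can be explored with a what-if sketch. The third-batch defect counts below are hypothetical, since the story does not report that sample, and `bayes_factor` is an illustrative helper name.

```python
def bayes_factor(k: int, n: int, p_temp: float = 0.08, p_pres: float = 0.04) -> float:
    """Likelihood ratio P(D | H_T) / P(D | H_P) for k defects in n parts."""
    return (p_temp / p_pres) ** k * ((1 - p_temp) / (1 - p_pres)) ** (n - k)


# Odds after the two batches actually observed in the story: 5/50, then 1/50.
odds_so_far = (0.6 / 0.4) * bayes_factor(5, 50) * bayes_factor(1, 50)

# HYPOTHETICAL third batch: try every defect count from 0 to 6 out of 50.
what_if = {}
for k3 in range(7):
    odds = odds_so_far * bayes_factor(k3, 50)
    what_if[k3] = odds / (1 + odds)
    print(f"{k3} defects in 50 -> P(Temperature) = {what_if[k3]:.1%}")
```

In this sketch, a very clean or very dirty batch moves the posterior decisively. A middling count such as 2 or 3 defects leaves it near the middle, so one extra batch may or may not settle the case.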

As data accumulates, the fog lifts and the truth converges. That is the way Bayes taught us.

I listened to the heartbeat of the machine again. We do not hastily shout, “The culprit is you!” We just update to the ‘probability closest to the truth’ while watching the constantly incoming data.

Core Insight of this Scenario

- Quantification of Intuition: The moment you change the feeling of “Seems like a temperature problem?” into the number P(H_T) = 0.6, management becomes possible. It is okay even if 0.6 is not the truth at that moment. The belief keeps updating as data accumulates, and with enough data it tends to converge on whichever candidate hypothesis explains the data best.

- Weight of Data (LLR): The 5/50 result was strong evidence (log-likelihood ratio of +1.55), but the second 1/50 result was nearly as strong counter-evidence (-1.39) and almost cancelled it out.

- Dynamic Decision Making: The Bayesian perspective is not a fixed conclusion. The humble and flexible attitude of “With the information so far, I know up to here” saves the engineer from mistakes.
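The LLR figures quoted in the list above can be reproduced with a short sketch. On the natural-log scale, evidence adds instead of multiplying, which makes it easy to see how the two samples nearly cancel. The helper name `llr` is an assumption made for this example.

```python
from math import exp, log


def llr(k: int, n: int, p_temp: float = 0.08, p_pres: float = 0.04) -> float:
    """Log-likelihood ratio ln[P(D | H_T) / P(D | H_P)] for k defects in n parts."""
    return k * log(p_temp / p_pres) + (n - k) * log((1 - p_temp) / (1 - p_pres))


log_prior_odds = log(0.6 / 0.4)  # about +0.41
llr_morning = llr(5, 50)         # about +1.55, favours Temperature
llr_afternoon = llr(1, 50)       # about -1.39, favours Pressure

# Evidence accumulates by simple addition on the log scale.
log_posterior_odds = log_prior_odds + llr_morning + llr_afternoon
posterior = 1.0 / (1.0 + exp(-log_posterior_odds))  # logistic function of the log-odds

print(f"Morning LLR   = {llr_morning:+.2f}")
print(f"Afternoon LLR = {llr_afternoon:+.2f}")
print(f"P(H_T | D1, D2) = {posterior:.1%}")
```

The two samples net out to only about +0.16, so the final belief sits just above the prior.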

[Guide] Math Commentary, Python Code Appendix

In this tense investigative drama, we have already experienced the four core steps of Bayesian statistics.

- Prior Probability (Prior): “Looking at the past, it seems like he is the culprit?” (Initial Belief)

- Likelihood: “The evidence at the scene fits his style perfectly!” (Suitability of Evidence)

- Bayes Factor: “How many times more strongly does this evidence support A than B?” (Weight of Evidence)

- Posterior Probability (Posterior): “Let’s modify my belief by reflecting the evidence.” (Final Conclusion)

And the most important thing: today’s posterior probability becomes tomorrow’s prior probability. This is precisely the essence of Learning.

- Math: This story is not a simple episode. It is a castle built upon thorough mathematical calculation. I will reveal the mathematical Blueprint in a separate appendix post on how the ‘Traditional Bayes’ Theorem’ learned in textbooks is converted into ‘Odds and Bayes Factors’ used in the field.

- Python Code: You can refer to another appendix post that has turned the scenario of the main text directly into Python code.